While computerized information visualizations are just a few decades old, the human practice of encoding information in the color, materials, and forms of physical artifacts (e.g., clay tokens, knotted ropes, weavings) has much deeper roots, dating back thousands of years. In the future, the complex, intertwined relationships among humans, computers, and information will manifest in myriad exciting ways across the Virtuality-Reality continuum. Information visualizations will, of course, retain the power, flexibility, and scalability of contemporary data-intensive computing, but they will also increasingly return to physical human capabilities for interacting with information and with each other.

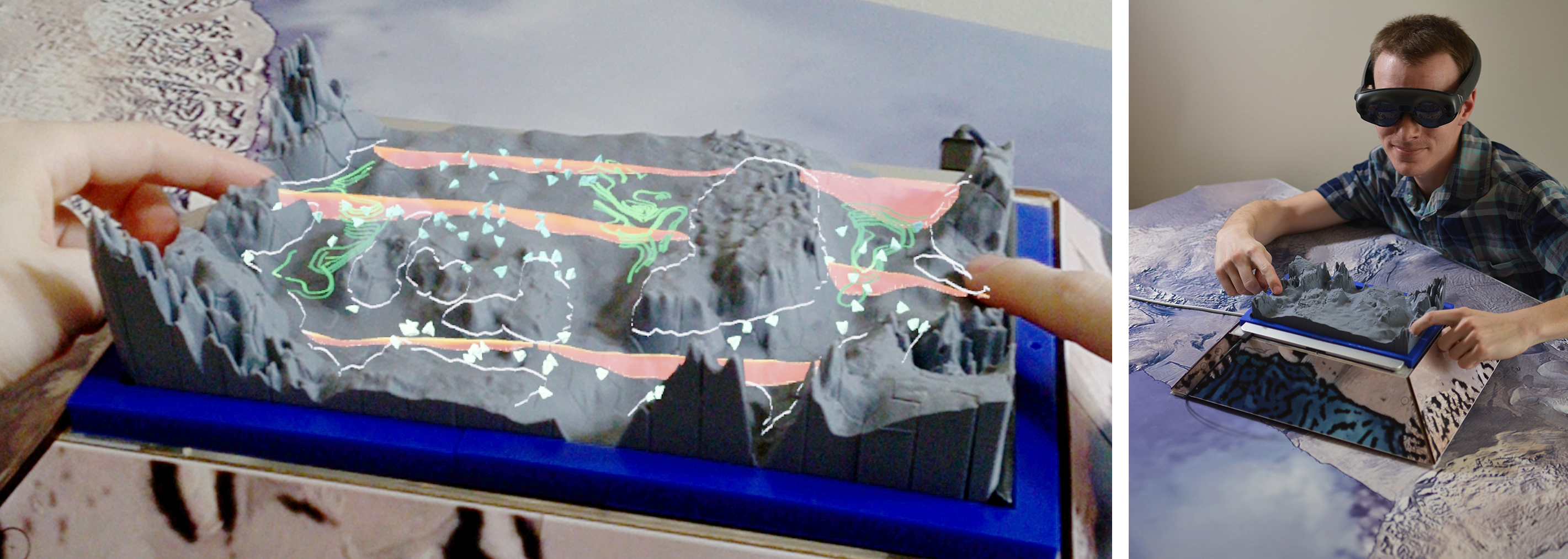

Associate Professor Daniel Keefe (Computer Science and Engineering) is working on a project called “Environmental Informatics in Extended Reality,” which invents and studies this future by creatively combining emerging technologies (e.g., AR headsets, 3D scanners, and 3D printers) with traditional art and design techniques to better understand modern environmental science data, including supercomputer simulations of oceans, fires, and more. New multi-material 3D printing technologies are used to output physical data visualizations that look and feel like wood grain, glass, crystal, sponge, and other materials. Lab-based user studies will then explore the relative merits of these hybrid digital+physical visualizations compared to today's standard digital data visualizations.

This project recently received a Research Computing Seed Grant.